Google Is Crawling a Bad Review From a Site That Is No Longer Available

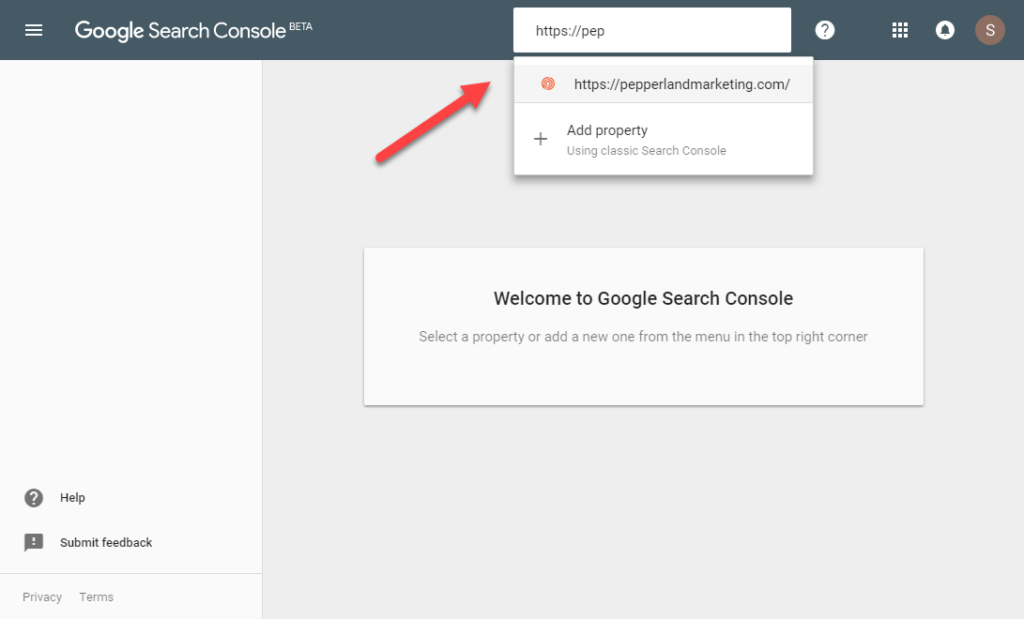

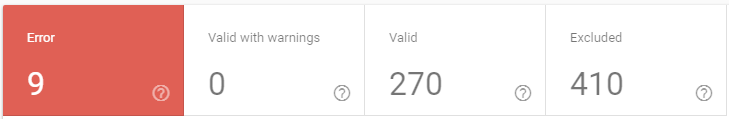

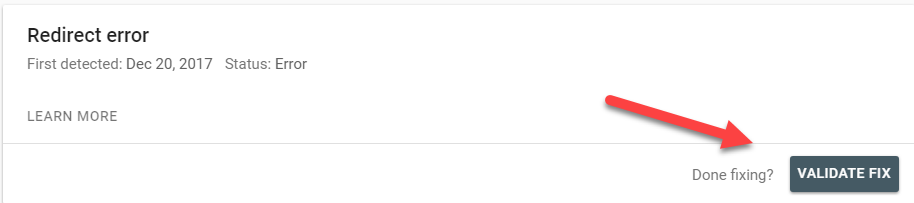

Google Search Console is a free application that allows you to identify, troubleshoot, and resolve any issues that Google may encounter as it crawls and attempts to index your website in search results. It can also give you a window into which pages are ranking well, and which pages Google has chosen to ignore.

In the video below, we offer a quick high-level overview of the tool and the reports Google Search Console provides.

One of the most powerful features of the tool is the Index Coverage Report. It shows you a list of all the pages on your site that Google tried to crawl and index, along with any issues it encountered along the way.

When Google is crawling your site, it means that your pages are being discovered and looked at to determine if their content is worthy of being indexed. Indexing means that those pages have been analyzed by Google's crawler ("Googlebot") and stored on index servers, making them eligible to come up for search engine queries.

If you're not the most technical person in the world, some of the errors you're likely to encounter there may leave you scratching your head. We wanted to make it a bit easier, so we put together this handy set of tips to guide you along the way. We'll also explore both the Mobile Usability and Core Web Vitals reports.

Before we dive in to each of the issues, here is a quick summary of what Google Search Console is, and how you can get started with it.

First things first: if you haven't already done so, you'll want to make sure you verify ownership of your website in Google Search Console. This step is one we highly recommend, as it allows you to see all of the subdomains that fall under your main site. Be sure to verify all versions of your domain.
This includes the http and https versions of your domain, both with and without www. Google treats each of these variations as a separate website, so if you do not verify each version, there's a good chance you'll miss out on some important information.

Once you've verified your website, navigate to the property you would like to start with. I recommend focusing on the main version of your website first. That is, the version you see when you try visiting your website in your browser. Ultimately, though, you'll want to review all versions.

Note: Many sites redirect users from one version to another, so there's a good chance this is the only version Google will be able to crawl and index. Therefore, it will have the majority of issues for you to troubleshoot.

You'll see a dashboard showing your trended performance in search results, index coverage, and enhancements. Click OPEN REPORT in the upper right-hand corner of the Index coverage chart. This is where you can do a deep dive into all of the technical issues that are potentially preventing your website from ranking higher in search results.

There are four types of issues: Error, Valid with warnings, Valid, and Excluded. In the section below, we'll walk through each of the potential issues you're likely to find here in layman's terms, and what you should do about them. The list comes from Google's official documentation, which we strongly recommend reading as well.

Once you believe you have solved the issue, you should inform Google by following the issue resolution workflow that is built into the tool. We've outlined the steps for doing this towards the bottom of the article.

If Google's crawler, Googlebot, encounters an issue when it tries to crawl your site and doesn't understand a page on your website, it's going to give up and move on.
This means your page will not be indexed and will not be visible to searchers, which greatly affects your search performance. Here are some of those errors. Focusing your efforts here is a great place to start.

Your server returned a 500-level error when the page was requested.

A 500 error means that something has gone wrong with a website's server that prevented it from fulfilling your request. In this case, something with your server prevented Google from loading the page.

First, check the page in your browser and see if you're able to load it. If you can, there's a good chance the issue has resolved itself, but you'll want to confirm. Email your IT team or hosting company and ask if the server has experienced any outages in recent days, or if there's a configuration that might be blocking Googlebot and other crawlers from accessing the site.

The URL was a redirect error. Could be one of the following types: it was a redirect chain that was too long; it was a redirect loop; the redirect URL eventually exceeded the max URL length; there was a bad or empty URL in the redirect chain.

This basically means your redirect doesn't work. Go fix it!

A common scenario is that your primary URL has changed a few times, so there are redirects that redirect to redirects. Example: http://yourdomain.com redirects to http://www.yourdomain.com, which then redirects to https://www.yourdomain.com.

Google has to crawl a ton of content, so it doesn't like wasting time and effort crawling these types of links. Solve this by ensuring your redirect goes straight to the final URL, eliminating all steps in the middle.

You submitted this page for indexing, but the page is blocked by robots.txt. Try testing your page using the robots.txt tester.
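If you would rather check a rule locally, the sketch below uses Python's standard `urllib.robotparser` module. The robots.txt content and the yourdomain.com URLs are made-up placeholders, and Python's parser does not replicate every detail of how Googlebot resolves conflicting rules, so treat the result as a first approximation rather than a substitute for Google's own tester.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; substitute the file served at your own /robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /drafts/
"""


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the robots.txt rules disallow user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://www.yourdomain.com/private/report.html",
        "https://www.yourdomain.com/blog/post.html",
    ):
        print(url, "blocked" if is_blocked(ROBOTS_TXT, url) else "allowed")
```

Running the same check against every URL in your sitemap is a quick way to find pages you have submitted for indexing while also blocking them.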
There is a line of code in your robots.txt file that tells Google it's not allowed to crawl this page, even though you've asked Google to do just that by submitting it to be indexed. If you do actually want it to be indexed, find and remove the line from your robots.txt file.

If you don't, check your sitemap.xml file to see if the URL in question is listed there. If it is, remove it. Sometimes WordPress plugins will sneak pages into your sitemap file that don't belong.

You submitted this page for indexing, but the page has a 'noindex' directive either in a meta tag or HTTP response. If you want this page to be indexed, you must remove the tag or HTTP response.

You're sending Google mixed signals. "Index me… no, DON'T!" Check your page's source code and look for the word 'noindex'. If you see it, go into your CMS and look for a setting that removes this, or find a way to modify the page's code directly.

It's also possible to 'noindex' a page through an HTTP header response via an X-Robots-Tag, which is a bit trickier to spot if you're not comfortable working with developer tools. You can read more about that here.

4. Click "Update" once you're done.

You submitted this page for indexing, but the server returned what seems to be a soft 404.

These are pages that look like they are broken to Google, but aren't properly showing a 404 Not Found response. These tend to bubble up in two ways:

You should either convert these pages to proper 404 pages, redirect them to their new location, or populate them with some real content. For more on this issue, be sure to read our in-depth guide to fixing Soft 404 errors.

You submitted this page for indexing, but Google got a 401 (not authorized) response.
Either remove authorization requirements for this page, or else allow Googlebot to access your pages by verifying its identity.

This warning is usually triggered when Google attempts to crawl a page that is only accessible to a logged-in user. You don't want Google wasting resources attempting to crawl these URLs, so you should try to find the location on your website where Google discovered the link, and remove it. For this to be "submitted", it would need to be included in your sitemap, so check there first.

You submitted a non-existent URL for indexing.

If you remove a page from your website but forget to remove it from your sitemap, you're likely to see this error. This can be prevented with regular maintenance of your sitemap file.

Deep Dive Guide: For a closer look at how to fix this error, read our article about how to fix 404 errors on your website.

You submitted this page for indexing, and Google encountered an unspecified crawling error that doesn't fall into any of the other reasons. Try debugging your page using the URL Inspection tool.

Something got in the way of Google's ability to fully download and render the contents of your page. Try using the URL Inspection tool (the replacement for the older Fetch as Google tool) as recommended, and look for discrepancies between what Google renders and what you see when you load the page in your browser.

If your page depends heavily on JavaScript to load content, that could be the problem. Most search engines still ignore JavaScript, and Google still isn't perfect at it. A long page load time and blocked resources are other possible culprits.

Warnings aren't quite as severe as errors but still require your attention. Google may or may not decide to index the content listed here, but you can increase the odds of your content being indexed, and potentially improve your rankings, if you resolve the warnings that Google Search Console uncovers.
The page was indexed, despite being blocked by robots.txt.

Your robots.txt file is sort of like a traffic cop for search engines. It allows some crawlers to go through your site and blocks others. You can block crawlers at the domain level or on a page-by-page basis.

Unfortunately, this specific warning is something we see all the time. It usually happens when someone attempts to block a bad bot and puts in an overly strict rule.

Below is an example of a local music venue that we noticed was blocking all crawlers from accessing the site, including Google. Don't worry, we let them know about it.

Even though the website remained in search results, the snippet was less than optimal because Google was unable to see the Title Tag, Meta Description, or page content.

So, how do you fix this? Most of the time, this warning occurs when both of these are present: a disallow command in your robots.txt file and a noindex meta tag in the page's HTML.

You've used a noindex directive to tell search engines that a page should not be indexed, but you've also blocked crawlers from viewing these pages in your robots.txt file. If search engine crawlers can't access these URLs, they can't see the noindex directive.

To drop these URLs from the index and resolve the "Indexed, though blocked by robots.txt" warning, you'll want to remove the disallow command for these URLs in your robots.txt file. Crawlers will then see the noindex directive and drop these pages from the index.

This tells you about the part of your site that is healthy. Google has successfully crawled and indexed the pages listed here. Even though these aren't issues, we'll still give you a quick rundown of what each status means.

You submitted the URL for indexing, and it was indexed.

You wanted the page indexed, so you told Google about it, and they totally dug it.
You got what you wanted, so go pour yourself a glass of champagne and celebrate!

The URL was discovered by Google and indexed.

Google found these pages and decided to index them, but you didn't make it as easy as you could have. Google and other search engines prefer that you tell them about the content you want to have indexed by including it in a sitemap. Doing so can potentially increase the frequency with which Google crawls your content, which may translate into higher rankings and more traffic.

The URL was indexed. Because it has duplicate URLs, we recommend explicitly marking this URL as canonical.

A duplicate URL is an example of a page that is accessible through multiple variations even though it is the same page. Common examples include when a page is accessible both with and without a trailing slash, or with a file extension at the end. Something like yoursite.com/index.html and yoursite.com, which both lead to the same page.

These are bad for SEO because they dilute any authority a page accumulates through external backlinks between the two versions. They also force a search engine to waste its resources crawling multiple URLs for a single page, and can make your analytics reporting pretty messy as well.

A canonical tag is a single line in your HTML that tells search engines which version of the URL they should prioritize, and consolidates all link signals to that version. They can be extremely beneficial to have and should be considered.

These are the pages that Google discovered, but chose not to index. For the most part, these will be pages that you explicitly told Google not to index. Others are pages that you might actually want to have indexed, but Google chose to ignore them because they weren't found to be valuable enough.

When Google tried to index the page it encountered a 'noindex' directive, and therefore did not index it.
If you do not want the page indexed, you have done so correctly. If you do want this page to be indexed, you should remove that 'noindex' directive.

This one is pretty straightforward. If you actually wanted this page indexed, they tell you exactly what you should do.

If you didn't want the page indexed, then no action is required, but you might want to think about preventing Google from crawling the page in the first place by removing any internal links. This would prevent search engines from wasting resources on a page they shouldn't spend time with, and let them focus more on pages that they should.

The page is currently blocked by a URL removal request.

Someone at your company directly asked Google to remove this page using their page removal tool. This is temporary, so consider deleting the page and allowing it to return a 404 error, or requiring a log-in to access it if you wish to keep it blocked. Otherwise, Google may choose to index it again.

This page was blocked to Googlebot with a robots.txt file.

If a page is indexed in search results, but then suddenly gets blocked with a robots.txt file, Google will typically keep the page in the index for a period of time. This is because many pages are blocked accidentally, and Google prefers the noindex directive as the best signal as to whether or not you want content excluded.

If the blockage remains for too long, Google will drop the page. This is likely because they will not be able to generate useful search snippets for your site if they cannot crawl it, which isn't good for anyone.

The page was blocked to Googlebot by a request for authorization (401 response). If you do want Googlebot to be able to crawl this page, either remove authorization requirements or allow Googlebot to access your pages by verifying its identity.
A common mistake is to link to pages on a staging site (staging.yoursite.com or beta.yoursite.com) while a site is still being built, but then forget to update those links once the site goes live in production.

Search your site for these URLs, and fix them. You may need help from your IT team to do this if you have a lot of pages on your site. Crawling tools like Screaming Frog SEO Spider can help you scan your site in bulk.

An unspecified anomaly occurred when fetching this URL. This could mean a 4xx- or 5xx-level response code.

This issue may be a sign of a prolonged issue with your server. See if the page is accessible in your browser, and try using the URL Inspection tool. You may want to check with your hosting company to confirm there aren't any major issues with the stability of your site.

In diagnosing this issue on many websites, we've found that the URLs in question are often:

If there's something funny going on with your redirects, you should clean that up. Make sure there is only one step in the redirect and that the page your URL is pointing to loads correctly and returns a 200 response. Once you've fixed the issue, be sure to go back and request a recrawl so your content will be recrawled and hopefully indexed.

If the page returns a 404, make sure you're not having page speed or server issues.

The page was crawled by Google, but not indexed. It may or may not be indexed in the future; no need to resubmit this URL for crawling.

If you see this, take a good hard look at your content. Does it answer the searcher's query? Is the content accurate? Are you offering a good experience for your users? Are you linking to reputable sources? Is anyone else linking to it?

Make sure to provide a detailed framework of all the page content that needs to be indexed through the use of structured data.
This allows search engines to not only index your content but also surface it in future queries and possible featured snippets.

Optimizing the page may increase the chances that Google chooses to index it the next time it is crawled.

Related: Why Isn't My Content Indexed? 4 Questions To Ask Yourself

The page was found by Google, but not crawled yet.

Even though Google discovered the URL, it did not feel it was important enough to spend time crawling. If you want this page to receive organic search traffic, consider linking to it more from within your own website. Be sure to promote this content to others with the hope that you can earn backlinks from external websites. External links to your content are a signal to Google that a page is valuable and considered to be trustworthy, which increases the odds of it being indexed.

This page is a duplicate of a page that Google recognizes as canonical, and it correctly points to that canonical page, so there is nothing for you to do here!

Just as the tool says, there's really nothing to do here. If it bothers you that the same page is accessible through more than one URL, see if there is a way to consolidate.

This page has duplicates, none of which is marked canonical. We think this page is not the canonical one. You should explicitly mark the canonical for this page.

Google is guessing which page you want them to index. Don't make it guess. You can explicitly tell Google which version of a page should be indexed using a canonical tag.

A non-HTML page (for example, a PDF file) is a duplicate of another page that Google has marked as canonical.

Google discovered a PDF on your site that contained the same information as a normal HTML page, so they chose to only index the HTML version.
Generally, this is what you want to happen, so no action should be necessary unless for some reason you prefer they use the PDF version instead.

This URL is marked as canonical for a set of pages, but Google thinks another URL makes a better canonical.

Google disagrees with you on which version of a page they should be indexing. The best thing you can do is make sure that you have canonical tags on all duplicate pages, that those canonicals are consistent, and that you're only linking to your canonical internally. Try to avoid sending mixed signals.

We've seen this happen when a website specifies one version of a page as the canonical, but then redirects the user to a different version. Since Google cannot access the version you have specified, it assumes that you've made an error, and overrides your directive.

This page returned a 404 error when requested. The URL was discovered by Google without any explicit request to be crawled.

Not found errors occur when Google tries to crawl a link or previously indexed URL to a page that no longer exists. Many 404 errors on the web are created when a website changes its links, but forgets to set up redirects from the old version to the new URL.

If a replacement for the page that triggers the 404 error exists on your website, you should create a 301 permanent redirect from the old URL to the new URL. This prevents Google, and your users, from seeing the 404 page and experiencing a broken link. It can also help you maintain the bulk of any search traffic that had been going to the old page.

If the page no longer exists, continue to let the URL return a 404 error, but try to eliminate any links to it. Nobody likes broken links.

The page was removed from the index because of a legal complaint.
If your website has been hacked and infected with malicious code, there's a good chance you'll see a whole lot of these issues bubbling up in your reports. Hackers love to create pages for illegal movie torrent downloads and prescription drugs, which legal departments at large corporations search for and file complaints against.

If you receive one of these, immediately remove the copyrighted material and make sure your website hasn't been hacked. Be sure that all plugins are up to date, that your passwords are secure, and that you're using the latest version of your CMS software.

The URL is a redirect, and therefore was not added to the index.

If you link to an old version of a URL that redirects to a new one, Google will still detect that URL and include it in the Coverage Report. Consider updating any link using the old version of the URL so search engines aren't forced to go through a redirect to discover your content.

Deep-Dive Guide: For more about best practices for redirects: Website Redirect SEO: 7 Self-Audit Questions

The page is in the crawling queue; check back in a few days to see if it has been crawled.

This is good news! Expect to see your content indexed in search results soon. Go microwave a bag of popcorn and check back in a little bit. Use this downtime to clean up all of the other pesky issues you've identified in the Index Coverage report.

The page request returns what we think is a soft 404 response.

In Google's eyes, these pages are a shell of their former selves: the remnants of something useful that once existed, but no longer does. You should convert these into 404 pages, or start populating them with useful content.

You submitted this page for indexing, but it was dropped from the index for an unspecified reason.
This issue's description is pretty vague, so it's hard to say with certainty what action you should take. Our best guess is that Google looked at your content, tried it out for a while, then decided to no longer include it.

Investigate the page and critique its overall quality. Is the page thin? Outdated? Inaccurate? Slow to load? Has it been neglected for years? Have your competitors put out something that's infinitely better?

Try refreshing and improving the content, and secure a few new links to the page. It may lead to a re-indexation of the page.

The URL is one of a set of duplicate URLs without an explicitly marked canonical page. You explicitly asked this URL to be indexed, but because it is a duplicate, and Google thinks that another URL is a better candidate for canonical, Google did not index this URL. Instead, we indexed the canonical that we selected.

Google crawled a URL that you explicitly asked it to, but then you also told Google a duplicate of the page was actually the one it should index and pay attention to.

Decide which version you want to have indexed, set it as the canonical, and then try to give preference to that version when linking to a page in the set of duplicates, both internally on your own website and externally.

As more and more of the web's traffic and daily searches occur on mobile devices, Google and other search engines have given increased focus to the importance of mobile usability when determining a page's rankings.

The Mobile Usability report in Google Search Console helps you quickly identify mobile-friendliness issues that could be hurting your users' experience and holding your website back from obtaining more organic traffic.

In the section below we walk through some of the most common issues detected in this report, and how you might work to resolve them.
The page includes plugins, such as Flash, that are not supported by most mobile browsers. We recommend designing your look and feel and page animations using modern web technologies.

In 2017, Adobe announced it would stop supporting Flash by the end of 2020. This was one of the final nails in the coffin of Flash, which wasn't mobile-friendly and was plagued with security issues. Today, there are better open web technologies that are faster and more power-efficient than Flash.

To fix this issue, you'll either need to replace your Flash element with a modern solution like HTML5, or remove the content altogether.

The page does not define a viewport property, which tells browsers how to adjust the page's dimension and scaling to suit the screen size. Because visitors to your site use a variety of devices with varying screen sizes (from large desktop monitors to tablets and small smartphones), your pages should specify a viewport using the meta viewport tag.

The "viewport" is the technical way that your browser knows how to properly scale images and other elements of your website so that it looks great on all devices. Unless you're HTML savvy, this will likely require the help of a developer. If you want to take a stab at it, this guide might be helpful.

The page defines a fixed-width viewport property, which means that it can't adjust for different screen sizes. To fix this error, adopt a responsive design for your site's pages, and set the viewport to match the device's width and scale accordingly.

In the early days of responsive design, some developers preferred to tweak the website for mobile experiences rather than making the website fully responsive. The fixed-width viewport is a nifty way to do this, but as more and more mobile devices enter the market, this solution is less appealing.

Google now favors responsive web experiences.
If you're seeing this issue, it's likely that you're frustrating some of your mobile users, and potentially losing out on some organic traffic. It may be time to call an agency or hire a developer to make your website responsive.

Horizontal scrolling is necessary to see words and images on the page. This happens when pages use absolute values in CSS declarations, or use images designed to look best at a specific browser width (such as 980px). To fix this error, make sure the pages use relative width and position values for CSS elements, and make sure images can scale as well.

This usually occurs when there is a single image or element on your page that isn't sizing correctly for mobile devices. In WordPress, this can commonly occur when an image is given a caption or a plugin is used to generate an element that isn't native to your theme.

The easy way to fix this issue is to simply remove the image or element that is not sizing correctly on mobile devices. The correct way to fix it is to modify your code to make the element responsive.

The font size for the page is too small to be legible and would require mobile visitors to "pinch to zoom" in order to read. After specifying a viewport for your web pages, set your font sizes to scale properly within the viewport.

Simply put, your website is too hard to read on mobile devices. To experience this first-hand, load the page in question on a smartphone.

According to Google, a good rule of thumb is to have the page display no more than 70 to 80 characters (about 8-10 words) per line on a mobile device. If you're seeing more than this, you should hire an agency or developer to modify your code.
This report shows the URLs for sites where touch elements, such as buttons and navigational links, are so close to each other that a mobile user cannot easily tap a desired element with their finger without also tapping a neighboring element. To fix these errors, make sure to correctly size and space buttons and navigational links to be suitable for your mobile visitors.

Ever visit a non-responsive website that had links so small that it was IMPOSSIBLE to use? That's what this issue is all about. To see the issue first-hand, try loading the page on a mobile device and click around as though you were one of your customers. If it's hard to navigate from one page to another, and the experience requires you to zoom in before you click, you can be certain that you have a problem.

Google recommends that clickable elements have a target touch size of about 48 pixels, which is roughly 9 mm, or about the size of a person's finger pad. Consider replacing small text links with large buttons as an easy solution.

It's important that you identify and fix any issues that may interfere with Google's ability to crawl and rank your website, but Google also takes into account whether your website provides a good user experience.

The Core Web Vitals report in Google Search Console zeroes in on page speed. It focuses on key page speed metrics that measure the quality of the user experience so that you can easily identify any opportunities for improvement and make the necessary changes to your site.

For all of the URLs on your site, the report displays three metrics (LCP, FID, and CLS) and a status (Poor, Needs Improvement, or Good).

Here are Google's parameters for determining the status of each metric.

Let's take a look at each of the three metrics, and how to improve each one.
Largest Contentful Paint (LCP) refers to the amount of time it takes to display the page's largest content element to the user after opening the page. If the main element fails to load within a reasonable amount of time (under 2.5s), this contributes to a poor user experience, since nothing is populating on-screen.

First Input Delay (FID) is a measure of how long it takes for the browser to respond to an interaction by the user (like clicking a link). This is a valuable metric as it's a direct representation of how responsive your website is. A user is likely to get frustrated if interactive elements on a page take too long to respond.

Cumulative Layout Shift (CLS) is a measure of how often the layout of your site changes unexpectedly during the loading phase. CLS is important because layout changes that occur while a user is attempting to interact with a page can be incredibly frustrating and result in a poor user experience.

A simple way to check these metrics at the page level and quickly identify fixes is to use Chrome DevTools.

After identifying a page with an issue within Google Search Console, navigate to that page URL and press Control+Shift+C (Windows) or Command+Shift+C (Mac). Alternatively, you can right-click anywhere on the page and click on "Inspect."

From there, you'll want to navigate to the "Lighthouse" menu option.

Lighthouse will audit the page and generate a report, scoring the performance of the page out of 100. You'll find measurements for LCP, FID, and CLS, in addition to other helpful page speed metrics.

The best part of Google Lighthouse is that it provides actionable recommendations for improving the above metrics, along with the Estimated Savings. Work with your developer to implement the recommended changes where possible.
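To make the three statuses concrete, here is a minimal sketch that buckets a measurement into Good, Needs Improvement, or Poor. The LCP boundary of 2.5 seconds comes from the text above; the remaining boundaries (4 s for LCP, 100 ms and 300 ms for FID, 0.1 and 0.25 for CLS) are the thresholds Google has published for these metrics, and the function names are our own, not part of any Google tool.

```python
# Upper bounds for "Good" and "Needs Improvement" per metric.
# Units: LCP in seconds, FID in milliseconds, CLS is unitless.
THRESHOLDS = {
    "LCP": (2.5, 4.0),
    "FID": (100, 300),
    "CLS": (0.1, 0.25),
}

# Statuses ordered from best to worst, used to pick a page's overall status.
SEVERITY = ["Good", "Needs Improvement", "Poor"]


def classify(metric: str, value: float) -> str:
    """Return the status bucket for a single metric value."""
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value <= good_max:
        return "Good"
    if value <= needs_improvement_max:
        return "Needs Improvement"
    return "Poor"


def page_status(measurements: dict) -> str:
    """Return the worst status across all metrics measured for a page."""
    statuses = [classify(metric, value) for metric, value in measurements.items()]
    return max(statuses, key=SEVERITY.index)


if __name__ == "__main__":
    sample = {"LCP": 2.1, "FID": 250, "CLS": 0.05}  # made-up measurements
    for metric, value in sample.items():
        print(metric, value, classify(metric, value))
    print("Page status:", page_status(sample))
```

Taking the worst metric as the page's status mirrors how the report groups URLs: a page with a fast LCP still lands in Needs Improvement if its FID is slow.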
As you navigate through each issue, you'll notice that clicking on a page's URL will open a new panel on the right side of your screen.

Go through this list and make sure you're happy with what you see. Google put these links here for a reason: they want you to use them.

If you're still confident the issue is resolved, you can finish the process by clicking VALIDATE FIX.

At this point, you'll receive an email from Google that lets you know the validation process has begun. This will take several days to several weeks, depending on the number of issues Google needs to re-crawl.

If the issue is resolved, there's a good chance that your content will get indexed and you'll start to show up in Google search results. This means a greater chance to drive organic search traffic to your site. Woohoo! You're a search-engine-optimization superhero!

Getting Started With Search Console

Domain Verification

Navigating the Index Coverage Report

Google Search Console Errors List

How To Fix A Server error (5xx):

How To Fix A Redirect error:

How To Solve:
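The redirect-chain fix described in this article (pointing every old URL straight at the final destination) can be sketched as follows. The redirect map is a plain dictionary built from the article's own yourdomain.com example; in practice you would export it from your server or CMS configuration, and the function names and the 10-hop limit are illustrative assumptions.

```python
from __future__ import annotations


def resolve_redirects(redirects: dict[str, str], start: str, max_hops: int = 10) -> list[str]:
    """Follow start through the redirect map and return every URL visited, in order.

    Raises ValueError if the chain loops back on itself or exceeds max_hops.
    """
    chain = [start]
    seen = {start}
    while chain[-1] in redirects:
        target = redirects[chain[-1]]
        if target in seen:
            raise ValueError(f"redirect loop detected at {target}")
        chain.append(target)
        seen.add(target)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"redirect chain from {start} exceeds {max_hops} hops")
    return chain


def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Return a new map in which every source URL points at its final destination."""
    return {source: resolve_redirects(redirects, source)[-1] for source in redirects}


# The chain from the example in this article.
REDIRECTS = {
    "http://yourdomain.com": "http://www.yourdomain.com",
    "http://www.yourdomain.com": "https://www.yourdomain.com",
}

if __name__ == "__main__":
    print(resolve_redirects(REDIRECTS, "http://yourdomain.com"))
    print(flatten(REDIRECTS))
```

After flattening, both old URLs redirect to https://www.yourdomain.com in a single step, which is the outcome the fix calls for.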

Submitted URL blocked by robots.txt:

How To Solve:

Submitted URL marked 'noindex':
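As noted in the article, a noindex directive can hide in either the page's HTML or an X-Robots-Tag response header. The sketch below checks both using only Python's standard library. It assumes you already have the page source and response headers in hand (for example, copied from your browser's developer tools), and it deliberately ignores bot-specific header forms such as `googlebot: noindex`.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [part.strip().lower() for part in content.split(",")]


def has_noindex(html: str, headers: dict) -> bool:
    """Return True if the HTML or the X-Robots-Tag header carries a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)

    header_value = ""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag":
            header_value = value
    header_directives = [part.strip().lower() for part in header_value.split(",")]

    return "noindex" in parser.directives or "noindex" in header_directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(has_noindex(page, {}))                                    # meta tag case
    print(has_noindex("<html></html>", {"X-Robots-Tag": "noindex"}))  # header case
```

Checking the header as well as the markup matters because a page can look clean in "view source" and still be excluded by the server's response.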

How To Solve on WordPress:

How To Solve on HubSpot:

Submitted URL seems to be a Soft 404:

Submitted URL returns unauthorized request (401):

Submitted URL not found (404):

How To Solve:

Submitted URL has crawl issue:

Google Search Console Warnings List

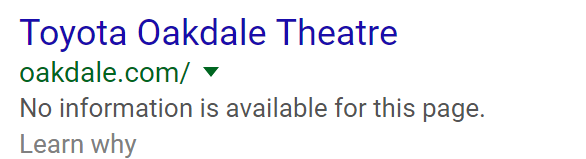

Indexed, though blocked by robots.txt:

Blocked By Robots

Google Search Console Valid URLs List

Submitted and indexed:

Indexed, not submitted in sitemap:

Indexed; consider marking as canonical:
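To spot the kind of duplicates described in this article (the same page reachable with and without a trailing slash, or with index.html on the end), one rough approach is to normalize each URL and group the ones that collapse to the same key. The normalization rules below are illustrative assumptions, not Google's own logic, and the `canonical_tag` helper simply renders the standard `<link rel="canonical">` element for the version you choose.

```python
from collections import defaultdict
from html import escape
from urllib.parse import urlsplit


def normalize(url: str) -> str:
    """Reduce a URL to host + path, dropping the scheme, a trailing slash, and index.html."""
    parts = urlsplit(url if "//" in url else "//" + url)
    path = parts.path
    if path.endswith("/index.html"):
        path = path[: -len("index.html")]
    path = path.rstrip("/") or "/"
    return parts.netloc.lower() + path


def find_duplicates(urls: list) -> dict:
    """Group URLs that normalize to the same key; keep only groups with more than one URL."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: group for key, group in groups.items() if len(group) > 1}


def canonical_tag(url: str) -> str:
    """Render the canonical link element to place in the <head> of each duplicate."""
    return f'<link rel="canonical" href="{escape(url, quote=True)}">'


if __name__ == "__main__":
    crawled = [
        "yoursite.com/index.html",
        "yoursite.com",
        "https://yoursite.com/blog/",
        "https://yoursite.com/blog",
        "https://yoursite.com/about",
    ]
    for key, group in find_duplicates(crawled).items():
        print(key, "->", group)
    print(canonical_tag("https://yoursite.com/blog"))
```

Each group it reports is a candidate for a single canonical tag; which URL in the group should win is still your decision.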

Google Search Console Excluded URLs

Blocked by 'noindex' tag:

Blocked by page removal tool:

Blocked by robots.txt:

Blocked due to unauthorized request (401):

Crawl anomaly:

Crawled – currently not indexed:

Discovered – currently not indexed:

How To Solve on Google Search Console:

Alternate page with proper canonical tag:

Duplicate without user-selected canonical:

Duplicate non-HTML page:

Duplicate, Google chose different canonical than user:

Not found (404):

Page removed because of legal complaint:

Page with redirect:

Queued for crawling:

Soft 404:

Submitted URL dropped:

Duplicate, Submitted URL not selected as canonical:

How To Navigate the Mobile Usability Report

When your page uses incompatible plugins

Viewport not set

Viewport not set to "device-width"
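Both viewport issues in this article come down to the contents of a single meta tag, so they are easy to check for in a page's source. The sketch below is a rough local check written with Python's standard `html.parser`; the status strings reuse the report's issue names, and the parsing of the `content` attribute is a simplification of what browsers actually do.

```python
from html.parser import HTMLParser


class ViewportParser(HTMLParser):
    """Capture the content attribute of <meta name="viewport">, if present."""

    def __init__(self):
        super().__init__()
        self.content = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "viewport":
            self.content = attr.get("content") or ""


def viewport_status(html: str) -> str:
    """Classify a page as OK, 'Viewport not set', or 'Viewport not set to "device-width"'."""
    parser = ViewportParser()
    parser.feed(html)
    if parser.content is None:
        return "Viewport not set"

    # Split "width=device-width, initial-scale=1" into a dict of settings.
    settings = {}
    for part in parser.content.split(","):
        if "=" in part:
            key, value = part.split("=", 1)
            settings[key.strip().lower()] = value.strip().lower()

    if settings.get("width") != "device-width":
        return 'Viewport not set to "device-width"'
    return "OK"


if __name__ == "__main__":
    responsive = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
    fixed = '<head><meta name="viewport" content="width=980"></head>'
    print(viewport_status(responsive))
    print(viewport_status(fixed))
    print(viewport_status("<head><title>No viewport</title></head>"))
```

The first sample is the commonly recommended responsive tag; the second reproduces the fixed-width setup (980px) that the report flags.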

Content wider than screen

Text too small to read

Clickable elements too close together

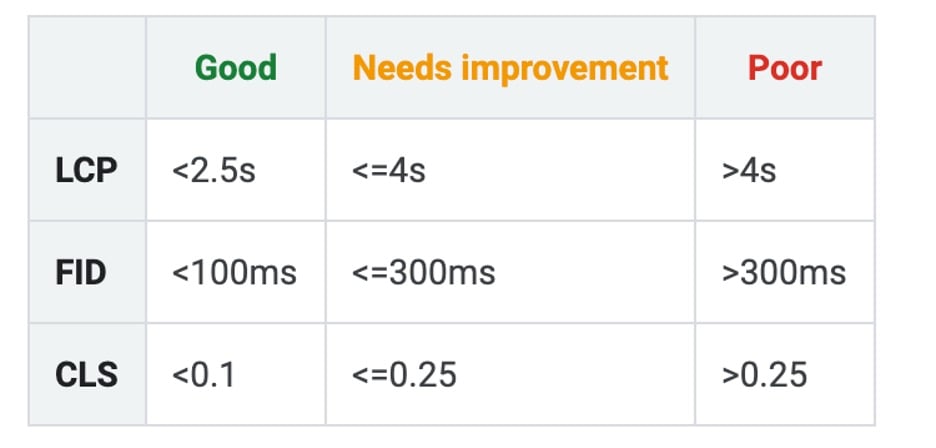

How To Navigate the Core Web Vitals Report

Largest Contentful Paint (LCP)

First Input Delay (FID)

Cumulative Layout Shift (CLS)

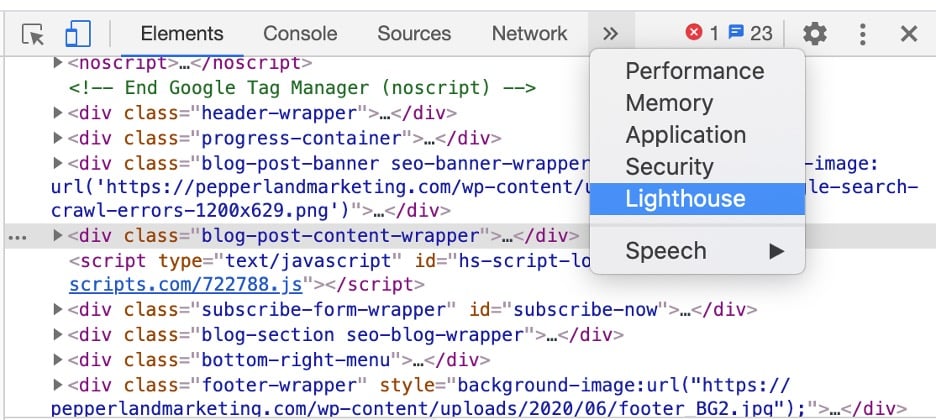

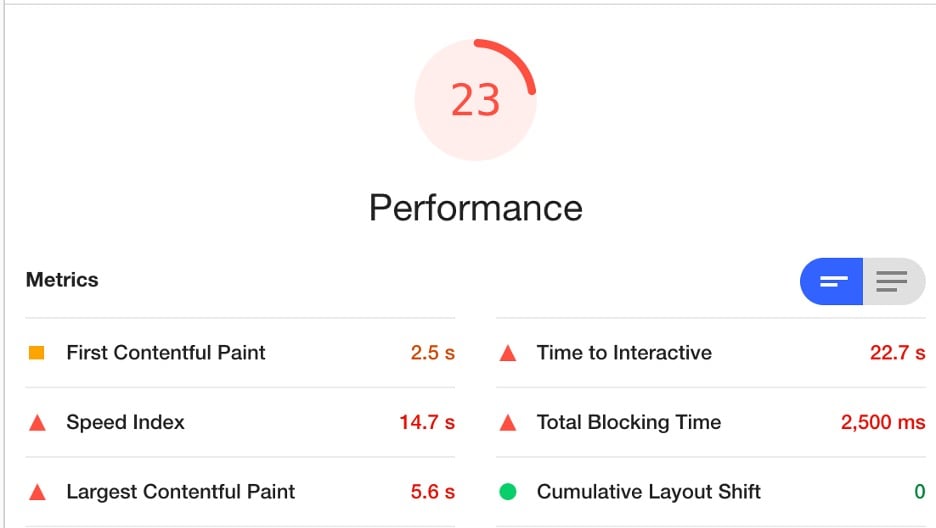

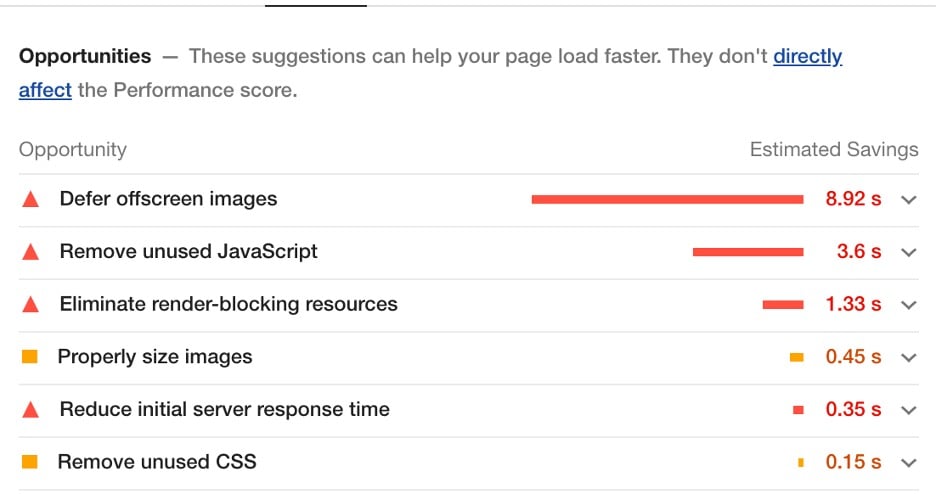

Improving Core Web Vitals

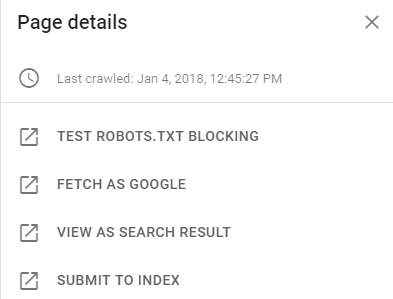

How To Tell Google You've Fixed An Issue

Source: https://www.pepperlandmarketing.com/blog/google-search-console-errors
